Introduction

The purpose of this lab was to introduce advanced classification algorithms. Advanced classifiers can classify remotely sensed images more accurately than traditional unsupervised and supervised classifiers. In this lab, two approaches were used to increase classification accuracy: an expert system (decision tree) classification that draws on ancillary data, and an artificial neural network.

Part 1: Expert System Classification

The first section of this lab took an already classified image and improved its classification accuracy (fig. 1). The first step of this process is to build the knowledge used to train the classifier. This knowledge can include data that are not contained in the image itself, such as parcel data, census block data, and soil type. The classified image we were given contained multiple errors. Some areas within the city were misclassified; for example, parks and cemeteries were classified as agricultural land (fig. 2).

Figure 1. Original classified image for Eau Claire and Chippewa Falls.

Figure 2. Misclassified areas within the city. The pink area in the northeast section of the image is a cemetery that was misclassified as agriculture.

To improve the accuracy of the image, the misclassified pixels were reclassified using the Knowledge Engineer tool in ERDAS Imagine. The process can be broken down into three main components: hypotheses, rules, and variables. A hypothesis is the output class that the model is trying to assign. A rule states the conditions, expressed as relationships between the image classes and the other data, under which a hypothesis holds. The variables are the inputs that the rules test, such as the original classified image and the ancillary layers.

Figure 3. Knowledge Engineer model used to correct the original image.
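The hypothesis, rule, and variable structure can be sketched outside ERDAS as a per-pixel conditional recode. The following is only an illustration in plain Python, not the Knowledge Engineer's own format: the class codes, the `apply_rule` function, the choice of a green vegetation output class, and the tiny example rasters are all hypothetical, since the lab text does not list them.

```python
# Hypothetical class codes; the lab's actual legend is not given in the text.
WATER, FOREST, AGRICULTURE, URBAN, GREEN_VEGETATION = 1, 2, 3, 4, 5


def apply_rule(classified, urban_mask):
    """Return a corrected copy of `classified` (the input is not modified).

    Hypothesis: the pixel is green vegetation (e.g. a park or cemetery).
    Rule:       the pixel was classified as agriculture AND the ancillary
                mask says it lies inside the city.
    Variables:  the classified image and the ancillary urban mask.
    """
    corrected = []
    for class_row, mask_row in zip(classified, urban_mask):
        corrected.append([
            GREEN_VEGETATION if (code == AGRICULTURE and inside_city) else code
            for code, inside_city in zip(class_row, mask_row)
        ])
    return corrected


# Tiny made-up rasters: rows of class codes and a matching boolean mask.
classified = [
    [AGRICULTURE, AGRICULTURE, URBAN],
    [FOREST, AGRICULTURE, URBAN],
]
urban_mask = [
    [False, True, True],
    [False, True, True],
]

corrected = apply_rule(classified, urban_mask)
```

Agriculture pixels outside the mask keep their class, so the rule only touches the kind of error described above: farmland "found" inside the city.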

In the next section of the lab, we incorporated ancillary data into the model to increase the accuracy of the original classification. This was again done in the Knowledge Engineer tool in ERDAS Imagine. For this section, additional classes were created to better separate the different land use/land cover (LULC) classes across the Eau Claire/Chippewa Falls area. These extra classes were Vegetation 2, Agriculture 2, Residential, and Other Urban.

Figure 4. Knowledge Engineer model used to produce the new classification.

Once the model was built (fig. 4), the ancillary data were added as a knowledge base file. The model was then run to create a corrected image (fig. 5). The two agriculture classes and the two vegetation classes were each merged into a single class to reduce complexity in the final image.

Figure 5. Corrected image using expert system classification.
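Merging the split classes back together amounts to a simple recode with a lookup table. A minimal sketch, assuming hypothetical class codes and a hypothetical `merge_classes` helper (the lab itself did this inside ERDAS Imagine):

```python
# Hypothetical class codes for the expanded legend.
VEGETATION_1, AGRICULTURE_1, URBAN = 2, 3, 4
VEGETATION_2, AGRICULTURE_2 = 6, 7

# Each split class maps back to its parent; all other codes pass through.
MERGE = {VEGETATION_2: VEGETATION_1, AGRICULTURE_2: AGRICULTURE_1}


def merge_classes(image, mapping):
    """Recode every pixel through `mapping`, leaving unmapped codes as-is."""
    return [[mapping.get(code, code) for code in row] for row in image]


# Made-up expert-system output containing both the original and split classes.
expert_output = [
    [VEGETATION_1, VEGETATION_2, AGRICULTURE_2],
    [AGRICULTURE_1, AGRICULTURE_2, URBAN],
]

final_image = merge_classes(expert_output, MERGE)
```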

Part 2: Artificial Neural Network Classification

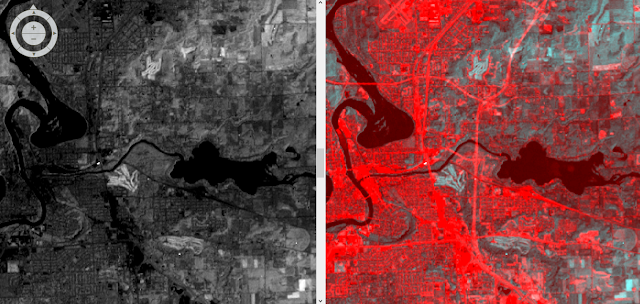

The next section of the lab involved using an artificial neural network (ANN) to classify an image in ENVI. An ANN works in a way loosely similar to the human brain: the computer learns from the inputs it is given. This is done through input, hidden, and output layers, where the hidden layers convert the inputs into values that the output layer can use. We were given an image that covers the UNI campus. For the ANN to work, training samples need to be collected. For this lab we were given multiple regions of interest (ROIs) to train the ANN (fig. 6). Once the ROIs were brought into the image, the Neural Network tool was used to run the ANN and create a classified image (fig. 7).

Figure 6. False color image of the UNI campus with the three different ROIs used to train the ANN.

Figure 7. Classified image of the UNI campus created from the ANN classification.
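To make the input, hidden, and output layers concrete, here is a minimal feed-forward network trained with backpropagation in plain Python. It is a sketch of the general technique, not ENVI's implementation. The `TinyANN` class, the three class names, the band values, and the training settings are all assumptions made for illustration; the lab's actual ROIs are not described in the text.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyANN:
    """One hidden layer, logistic activations, squared-error backpropagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # One weight row per neuron; the last weight in each row is the bias.
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                      for _ in range(n_out)]

    def forward(self, pixel):
        # The hidden layer turns band values into intermediate activations.
        hidden = [sigmoid(sum(w * v for w, v in zip(row, pixel + [1.0])))
                  for row in self.w_hidden]
        # The output layer turns those activations into one score per class.
        out = [sigmoid(sum(w * v for w, v in zip(row, hidden + [1.0])))
               for row in self.w_out]
        return hidden, out

    def train(self, samples, rate=0.5, epochs=3000):
        for _ in range(epochs):
            for pixel, label in samples:
                hidden, out = self.forward(pixel)
                target = [1.0 if k == label else 0.0 for k in range(len(out))]
                # Error terms, computed before any weight is changed.
                d_out = [(o - t) * o * (1 - o) for o, t in zip(out, target)]
                d_hid = [h * (1 - h) * sum(d * self.w_out[k][j]
                                           for k, d in enumerate(d_out))
                         for j, h in enumerate(hidden)]
                for k, d in enumerate(d_out):
                    for j, v in enumerate(hidden + [1.0]):
                        self.w_out[k][j] -= rate * d * v
                for j, d in enumerate(d_hid):
                    for i, v in enumerate(pixel + [1.0]):
                        self.w_hidden[j][i] -= rate * d * v

    def classify(self, pixel):
        _, out = self.forward(pixel)
        return out.index(max(out))


# Hypothetical ROI samples: (NIR, red, green) reflectance and a class label.
VEGETATION, WATER, BUILT = 0, 1, 2
training = [
    ([0.80, 0.10, 0.15], VEGETATION),
    ([0.05, 0.08, 0.10], WATER),
    ([0.40, 0.45, 0.42], BUILT),
    ([0.75, 0.12, 0.20], VEGETATION),
    ([0.03, 0.06, 0.09], WATER),
    ([0.50, 0.50, 0.48], BUILT),
    ([0.85, 0.08, 0.12], VEGETATION),
    ([0.06, 0.10, 0.12], WATER),
    ([0.45, 0.40, 0.40], BUILT),
]

net = TinyANN(n_in=3, n_hidden=4, n_out=3)
net.train(training)
predicted = [net.classify(pixel) for pixel, _ in training]
```

Once trained, `net.classify(pixel)` can be called on every pixel of an image to build a classified map. That is the role the ROIs play in the lab: they supply the labelled pixels the network learns from.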

Conclusion

Advanced classifiers help image analysts improve on the results of both supervised and unsupervised classification. Advanced classifiers such as expert systems and neural networks help delineate classes more accurately through the use of machine learning and ancillary data.