The objective of this lab was to use two different forms of land use/land cover (LULC) change detection. The first was a qualitative visual assessment; the second was a quantitative post-classification change detection.

Methods

Part 1: Change detection using Write Function Memory Insertion

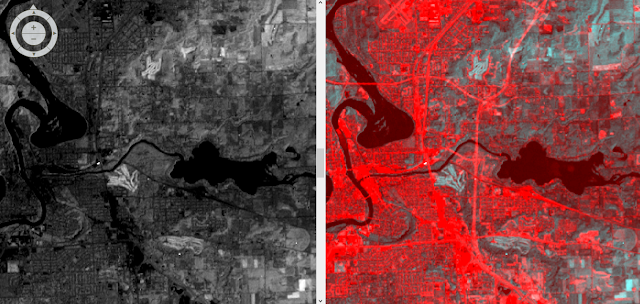

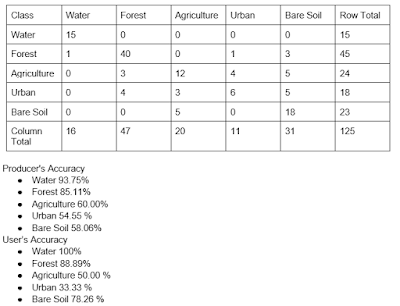

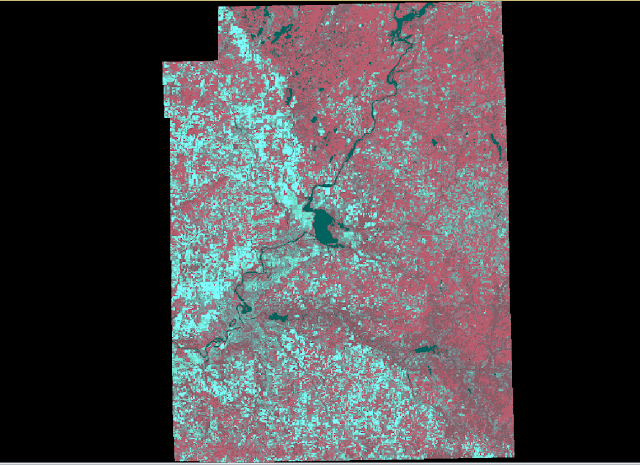

The first change detection method used in this lab was Write Function Memory Insertion. This method places the near-infrared bands from different dates into different color guns (fig. 1). For this lab, two images covering western Wisconsin were used: one from 1991 and the other from 2011. When the bands were stacked in the combination shown in figure 1, areas that had changed appeared with a pink tint (fig. 2). This method allows an analyst to quickly inspect an area visually and see where change has occurred in a qualitative sense. While it does show LULC change, it does not tell the user which specific LULC classes have changed or how much change has occurred.

Figure 1. Color gun combination used to complete the Write Function change detection.
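The band stacking behind this method can be sketched in a few lines of Python. This is only an illustration on synthetic nested lists, not the workflow used in the lab. The gun assignment below (later date in red, earlier date in green and blue) is an assumption, since the actual combination appears only in the figure, and the function names are hypothetical.

```python
def stretch(band):
    """Linearly rescale a 2-D list of values to the range 0..1."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in band]
    return [[(v - lo) / (hi - lo) for v in row] for row in band]


def write_function_composite(nir_early, nir_late):
    """Build an RGB composite from the NIR bands of two dates.

    Assumed gun assignment (not taken from the figure):
    red = later date, green = blue = earlier date.
    """
    early, late = stretch(nir_early), stretch(nir_late)
    return [
        [(l, e, e) for e, l in zip(row_e, row_l)]
        for row_e, row_l in zip(early, late)
    ]


# Synthetic 2x2 example: the top-right pixel brightens in NIR by the later date.
nir_1991 = [[0.2, 0.2], [0.8, 0.2]]
nir_2011 = [[0.2, 0.8], [0.8, 0.2]]
rgb = write_function_composite(nir_1991, nir_2011)
```

Under this assumed assignment, pixels with the same relative brightness on both dates get equal red, green, and blue values and so appear gray, while pixels that changed take on a color cast. A real raster would normally be handled with an array library rather than nested lists.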

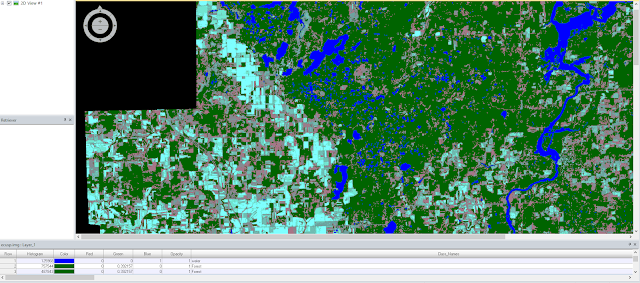

For the second part of the lab, the post-classification change detection, we were given two classified LULC images of the Milwaukee metropolitan area, one for 2001 and one for 2011. The area of each LULC class in each image was recorded in square meters and converted to hectares in Excel (fig. 2). The percent change between the two dates was then calculated for each LULC class.

Figure 2. Excel sheet displaying the area of each LULC class.
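The spreadsheet arithmetic is simple enough to sketch in Python. The example below assumes areas recorded in square meters and computes percent change relative to the earlier date. The class names and area values are placeholders for illustration, not the figures from the lab.

```python
SQ_M_PER_HECTARE = 10_000  # 1 ha = 10,000 square meters


def to_hectares(area_sq_m):
    """Convert an area in square meters to hectares."""
    return area_sq_m / SQ_M_PER_HECTARE


def percent_change(old, new):
    """Percent change relative to the earlier value."""
    if old == 0:
        raise ValueError("percent change is undefined when the earlier area is zero")
    return (new - old) / old * 100


# Placeholder areas in square meters (illustrative only, not the lab's values).
area_2001 = {"class_a": 2_000_000, "class_b": 500_000}
area_2011 = {"class_a": 1_500_000, "class_b": 600_000}

for name in area_2001:
    old_ha = to_hectares(area_2001[name])
    new_ha = to_hectares(area_2011[name])
    change = percent_change(old_ha, new_ha)
    print(f"{name}: {old_ha:.1f} ha -> {new_ha:.1f} ha ({change:+.1f}%)")
```

Because percent change is a ratio, it comes out the same whether it is computed from square meters or hectares; the conversion only matters for the reported areas.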

Figure 3. The Wilson-Lula Algorithm used to determine how the LULC had changed between 2001 and 2011.
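The model in the figure is not reproduced in the text, so the following is only a generic "from-to" sketch of the kind of comparison a post-classification method performs: flag the pixels that belonged to one class on the earlier date and another class on the later date. The class codes, the 30 m pixel size, and the function names are all assumptions made for illustration.

```python
def from_to_mask(early, late, from_class, to_class):
    """Return a 0/1 grid marking pixels that were from_class in the
    earlier map and to_class in the later map."""
    return [
        [1 if (a == from_class and b == to_class) else 0
         for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(early, late)
    ]


def changed_area_ha(mask, pixel_size_m):
    """Area of flagged pixels in hectares, given a square pixel size in meters."""
    pixels = sum(sum(row) for row in mask)
    return pixels * pixel_size_m ** 2 / 10_000


# Hypothetical class codes and a synthetic 2x3 pair of classified maps.
AGRICULTURE, URBAN = 1, 2
lulc_2001 = [[1, 1, 2], [1, 2, 2]]
lulc_2011 = [[2, 1, 2], [2, 2, 2]]

ag_to_urban = from_to_mask(lulc_2001, lulc_2011, AGRICULTURE, URBAN)
area_ha = changed_area_ha(ag_to_urban, pixel_size_m=30)  # 30 m pixels assumed
```

Running one such mask per class pair of interest gives the layers that a from-to change map can be built from.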


Figure 4. Map created using ArcMap showing how the LULC for the Milwaukee metropolitan area had changed between 2001 and 2011.

The images for this lab were provided by Dr. Cyril Wilson.